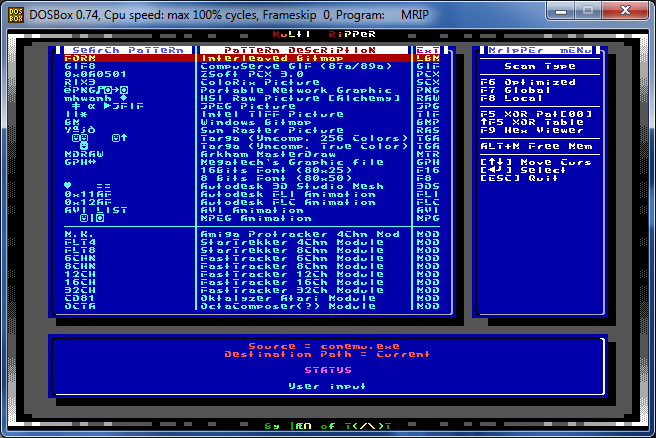

Let's start by making sure the problem is stated clearly: we have a large memory blob (anywhere from 500 MB to 1 TB) and we want to find all the raw bitmaps in it, along with their widths. Since this is kinda ambiguous: by "raw bitmaps" I mean neither the camera RAW formats used in digital photography (NEF, ORF, CR2 and the like) nor "image files" (PNG, BMP, JPG, GIF, TIFF and the like). How to find most of those is common knowledge that can be summarized as "find the magic or pattern that's commonly at the beginning". This approach has been used by many old-school ripper programs like Multi Ripper (seen around in the late '90s, though I remember such apps from at least a few years earlier) and other similar, even older apps, as well as by newer stuff like binwalk or PhotoRec. What we're looking for is just plain bitmap data (8/24/32 bpp for starters) without any magic values, headers, compression or other strange encodings.
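For contrast, the magic-based approach really is that simple at its core. A minimal sketch, assuming the blob is in memory or mmap-ed (`mmap.mmap` objects also provide a `find` method); `find_magics` and the signature table are hypothetical illustrations, not code from any of the tools mentioned, and real carvers also validate the header after a hit:

```python
# Hypothetical signature table: a few well-known file-format magics.
# Very short magics (e.g. BMP's b"BM") are left out because they produce
# a flood of false positives in arbitrary data.
SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "PNG",
    b"\xff\xd8\xff": "JPEG",
    b"GIF87a": "GIF",
    b"GIF89a": "GIF",
    b"II*\x00": "TIFF (little-endian)",
    b"MM\x00*": "TIFF (big-endian)",
}


def find_magics(blob):
    """Return a sorted list of (offset, format_name) for every known signature hit."""
    hits = []
    for magic, name in SIGNATURES.items():
        start = 0
        while True:
            pos = blob.find(magic, start)
            if pos == -1:
                break
            hits.append((pos, name))
            start = pos + 1  # keep scanning after this hit
    return sorted(hits)
```

None of this helps with headerless pixel data, which is exactly the problem here.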

Where would this be useful? In analyzing various memory dumps or disk dumps where you can't make any smart calls about kernel/FS/heap/app memory structures, or where parts of those structures are missing or have been wiped (so Volatility/Sleuth Kit are useless).

Usually the way I did this (and still do) was to open the file in IrfanView as .raw, set the width to something around 1024, the height to a large value and the offset to whatever part I was analyzing, and then scroll through the huge bitmap, relying on my brain to spot any patterns. I'm not going to describe the exact details of this method, since Bernardo beat me to it and I really have nothing to add (though his GIMP method seems friendlier, as you get a scroll bar to set the width, which is waaay better than typing the number in manually in IrfanView). What surprised me about his post is that the CTF task he gives as an example, coor coor from 9447, is the exact task I had in mind when I started the discussion with Ange (which later moved to Twitter and prompted Bernardo to write his post). Here are three of my findings from that task:
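The "open it as .raw" step can also be scripted, which makes sweeping widths easier. A minimal sketch, assuming 8 bpp grayscale or 24 bpp RGB and writing the Netpbm PGM/PPM formats (which almost any viewer opens); `raw_to_pnm` is a hypothetical helper, not part of IrfanView or GIMP:

```python
def raw_to_pnm(blob, offset, width, height, bytes_per_pixel=1):
    """Render part of a blob as a binary PGM (8 bpp gray) or PPM (24 bpp RGB) image.

    Mimics opening headerless data as a .raw image: bytes are taken row by
    row starting at `offset`; bytes missing past the end are padded with zeros.
    """
    if offset < 0 or width <= 0 or height <= 0:
        raise ValueError("offset must be >= 0, width and height > 0")
    if bytes_per_pixel == 1:
        magic = "P5"  # grayscale, one byte per pixel
    elif bytes_per_pixel == 3:
        magic = "P6"  # RGB, three bytes per pixel
    else:
        raise ValueError("only 1 (gray) or 3 (RGB) bytes per pixel supported here")
    size = width * height * bytes_per_pixel
    pixels = bytes(blob[offset:offset + size])
    pixels += bytes(size - len(pixels))  # zero-pad a truncated tail
    header = f"{magic}\n{width} {height}\n255\n".encode("ascii")
    return header + pixels
```

For example, write `raw_to_pnm(data, 0x100000, 1024, 4096)` to `slice.pgm` and regenerate it for 1020, 1022, 1024, ... as a crude stand-in for GIMP's width slider. Raw dumps often store 24/32 bpp pixels as BGR(A), so channels may need swapping (and alpha bytes dropping) before writing a P6 file.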

The discussion on Twitter turned up several interesting links/ideas:

- @doegox pointed to his tool https://doegox.github.io/ElectronicColoringBook/

- @jchillerup pointed to the cantor dust talk/tool, which doesn't solve the problem but looks like probably the best non-automatic tool for this purpose; some of its patterns remind me of one of my previous blog posts, which I guess sparks an idea on how to find candidate bitmaps in the binary blob.

- @scanlime pointed to autocorrelation, which gives a name to the problem I was thinking about and points towards a solution.

- @hanno suggested testing JPEG compression at various widths/offsets, which would be another way to find candidate bitmaps.

- @sqaxomonophonen pointed to using an FFT and looking for spikes, which would be a way to determine the width.

- @CrazyLogLad suggested something similar.

- @aeliasen said this:

> I'd calculate the autocorrelation of the bytes; period with strongest autocorr. should give width. http://futureboy.us/fsp/colorize.fsp?f=correlation.frink You might have to throw out small periods (like 1-3) and divide by pixel depth.

Seems I need to do some reading on autocorrelation/FFT to move this forward.
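A naive sketch of @aeliasen's suggestion, assuming the candidate region is already extracted into a `bytes` object: compute the normalized autocorrelation for every lag in a range, skip the small lags, and take the strongest one as the row stride in bytes (divide by bytes per pixel to get the width). `estimate_row_stride` and its defaults are hypothetical; it computes every lag directly, which is fine for a few KB but far too slow for a whole dump, where computing the autocorrelation via an FFT (inverse transform of the power spectrum) would be the way to go.

```python
def estimate_row_stride(data, min_lag=4, max_lag=4096):
    """Return the lag (in bytes) with the strongest normalized autocorrelation.

    Lags below `min_lag` are skipped because neighbouring bytes/pixels are
    almost always correlated. Direct O(len(data) * max_lag) computation.
    """
    n = len(data)
    max_lag = min(max_lag, n - 1)
    if min_lag < 1 or min_lag > max_lag:
        raise ValueError("need 1 <= min_lag <= max_lag < len(data)")
    mean = sum(data) / n
    dev = [b - mean for b in data]      # zero-mean signal
    energy = sum(d * d for d in dev)    # lag-0 autocorrelation
    if energy == 0:
        raise ValueError("constant data has no meaningful autocorrelation")
    best_lag, best_r = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Dividing by the lag-0 energy (not by n - lag) slightly penalizes
        # longer lags, so the real row stride beats its multiples (2x, 3x, ...).
        r = sum(dev[i] * dev[i + lag] for i in range(n - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag
```

On real images the curve is much less clean than on synthetic data: smooth images stay correlated for many lags past `min_lag`, so picking a local peak rather than the global maximum may work better.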

If anyone would like to try their luck with either of the two problems ([1] finding bitmap candidates in a LARGE binary blob and [2] automatically determining the width of a candidate), the coor coor dump is here (link shamelessly taken from Bernardo's blog):

coorcoor.tar.bz2
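As one possible starting point for problem [2], here is a variant of @hanno's compression idea. He suggested JPEG; as a standard-library-only stand-in (a substitution, so treat it as a sketch, not his method), the code below applies a PNG-style "Up" filter (each byte minus the byte one row above) for each candidate stride and compresses the result with zlib. If the stride matches the real row length, rows resemble their neighbours, the filtered bytes cluster around 0 and compress better. `up_filter` and `rank_strides` are hypothetical names, and the code assumes the region passed in is already a bitmap candidate.

```python
import zlib


def up_filter(data, stride):
    """PNG-style 'Up' filter: every byte minus the byte `stride` bytes earlier, mod 256."""
    out = bytearray(data[:stride])  # first row has nothing above it
    for i in range(stride, len(data)):
        out.append((data[i] - data[i - stride]) & 0xFF)
    return bytes(out)


def rank_strides(data, candidates, level=9):
    """Return (compressed_size, stride) pairs, smallest (most likely stride) first."""
    scores = []
    for stride in candidates:
        if 0 < stride < len(data):
            filtered = up_filter(data, stride)
            scores.append((len(zlib.compress(filtered, level)), stride))
    return sorted(scores)
```

For 24/32 bpp data the winning stride should be 3x/4x the width in pixels, possibly plus row padding. On a real dump you'd probably feed it a few hundred KB around a candidate rather than the whole blob.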

If you have any other ideas, comments or links, feel free to add them in the comment section.

Cheers,
